Category: exchange

-

Gmail showing “via” in Microsoft 365 Email Headers

I came across this issue the other day. In the headers of an email received from Microsoft 365 / Exchange Online into Gmail (and not other recipients) the message header reads “name@domain via otherdomain.onmicrosoft.com”, for example: In this case the via header for onmicrosoft.com was an old organization name and as tenant rename does not…

-

Finding Existing Plus Addresses

Exchange Online will automatically enable “Plus Addressing” for all tenants from Jan 2022. This change may cause issues if you have existing mailboxes where the SMTP address contains a + sign. That is, directors+managers@contoso.com would be considered a broken email address from Jan 2022 in Exchange Online. So you need to check you have no…
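
As a rough illustration of the check described above (not necessarily the approach used in the full article), the sketch below assumes you have already exported your SMTP addresses to a plain list. The function name `find_plus_addresses` and the sample addresses are hypothetical. It flags any address whose local part, the text before the @, contains a + sign.

```python
def find_plus_addresses(addresses):
    """Return the addresses whose local part (before the last @) contains a + sign."""
    flagged = []
    for address in addresses:
        # rpartition splits on the last @, so the domain is never inspected
        local_part, separator, _domain = address.rpartition("@")
        if separator and "+" in local_part:
            flagged.append(address)
    return flagged


# Hypothetical sample data standing in for an export of mailbox addresses
sample = [
    "directors+managers@contoso.com",
    "alice@contoso.com",
    "sales+emea@contoso.com",
]

plus_addresses = find_plus_addresses(sample)
print(plus_addresses)
```

Any address it returns would need reviewing before plus addressing is switched on for the tenant.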

-

Outlook AutoDetect And Broken AutoDiscover

Those in the Exchange Server space for a number of years know all about AutoDiscover and the many ways it can be configured and misconfigured – if even configured at all. Often misconfiguration is to do with certificates or it is not configured at all because it involves certificates and I thought I was aware…

-

550 5.1.8 Access denied, bad outbound sender AS(42003)

“Your message couldn’t be delivered because you weren’t recognized as a valid sender. The most common reason for this is that your email address is suspected of sending spam and it’s no longer allowed to send email. Contact your email admin for assistance.” This is an error you get when your anti-spam “outbound” policy restricts…

-

[New] External Email Notification in Exchange Online

This is a new feature released in March 2021 that adds support in Outlook (Mac, OWA, Mobile) for the display of the external status of the sender – note at the time of writing it does not add this feature to Outlook for the PC. This should be used to replace the way this has…

-

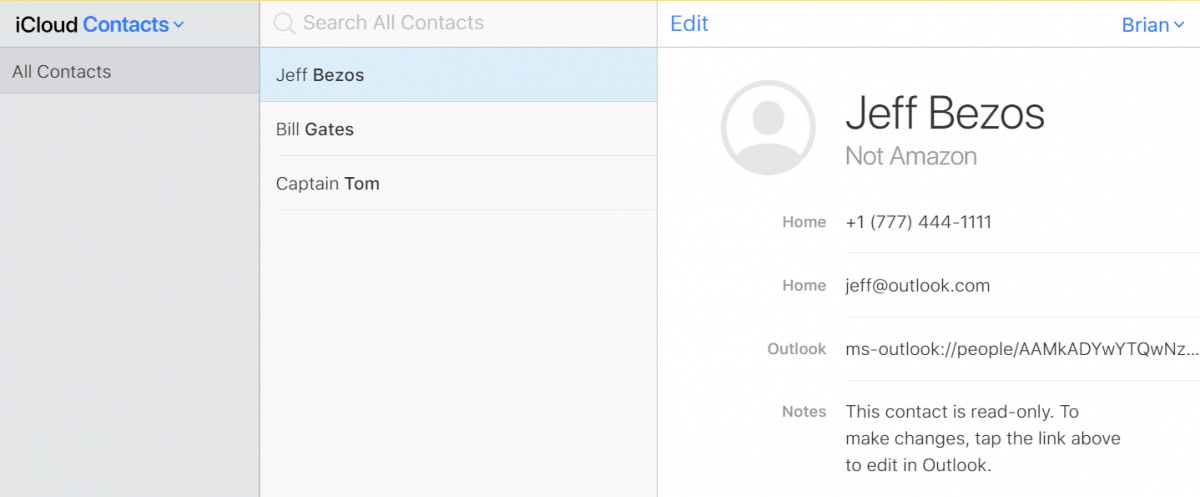

iOS and Outlook Mobile and Duplicate Contacts

Off the back of a few recent conversations about duplicate contacts on the iOS platform caused by syncing via multiple different routes or devices, I decided to try to reproduce the issues and see what I could work out. I looked on my test iPhone to see if I could see any duplicates and…

-

Why Do Comments In Microsoft 365 Planner Disappear?

So first you need an Exchange Online mailbox for comments to work. Comments to the tasks of Plans are stored in the Microsoft 365 Group mailbox, and you need an Exchange Online mailbox to access the M365 Group mailbox. Behind the scenes, or actually not that behind the scenes, the process for comments is as…

-

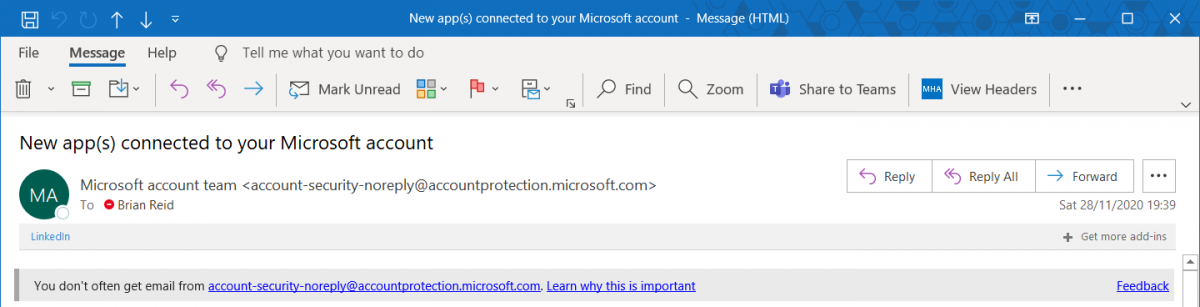

Exchange Online Warning On Receipt Of New Email Sender

Released recently to no fanfare at all, Microsoft now has a SafetyTip that appears if you receive email from a first-time sender. Most often phish emails will come from an address you have never received email from before, and sometimes this email will try to impersonate people you communicate with or are internal to…

-

Microsoft 365 From A Raspberry Pi 400 Personal Computer

So my new computer arrived today, it's a keyboard and a few cables, and as my first computer was a ZX Spectrum when I was 14, this brings back a few memories. But, is it usable today with services such as Microsoft 365? Let's see… First, the actual computer is in the keyboard, but its…

-

Enabling Better Mail Flow Security for Exchange Online

At Microsoft Ignite 2020, Microsoft announced support for MTA-STS, or Mail Transfer Agent Strict Transport Security. This is covered in RFC 8461 and it includes making TLS for mail flow to your domains mandatory whereas it is currently down to the decision of the sender. You can publish your SMTP endpoint and offer the STARTTLS…

-

Reporting on MTA-STS Failures

This article is a follow-up to Enabling Better Mail Flow Security for Exchange Online, which discusses setting up MTA-STS; here we cover the reporting for MTA-STS. To receive daily MTA-STS reports from each sending infrastructure, you just create a DNS record in the following format: It…

-

Enable EOP Enhanced Filtering for Mimecast Users

Enhanced Filtering is a feature of Exchange Online Protection (EOP) that allows EOP to skip back through the hops the message has been sent through to work out the original sender. Take for example a message from SenderA.com to RecipientB.com where RecipientB.com uses Mimecast (or another cloud security provider). The MX record for RecipientB.com is…

-

Mail Flow To The Correct Exchange Online Connector

In a multi-forest Exchange Server/Exchange Online (single tenant) configuration, you are likely to have multiple inbound connectors to receive email from the different on-premises environments. There are scenarios where it is important to ensure that the correct connector is used for the inbound message rather than any of your connectors. Here is one such example.…

-

Teams Calendar Fails To On-Premises Mailbox

Article Deprecated: Microsoft now auto-hides the Calendar icon in Teams if your on-premises Exchange Server is not reachable via AutoDiscover V2 or is not running Exchange Server 2016 CU3 or later. Once you move your mailbox to Exchange Online (or a supported on-premises version), assuming you did not do any of the below, your Calendar icon…

-

Too Many Folders To Successfully Migrate To Exchange Online

Exchange Online has a limit of 10,000 folders within a mailbox. If you try to migrate a mailbox with more than this number of folders then it will fail – and that would be expected. But what happens if you have a mailbox with fewer than this number of folders and it still fails for…

-

bin/ExSMIME.dll Copy Error During Exchange Patching

I have seen a lot of this, and there are some documents online but none that described what I was seeing. I was getting the following on an upgrade of Exchange 2013 CU10 to CU22 (yes, a big difference in versions): The following error was generated when “$error.Clear(); $dllFile = join-path $RoleInstallPath “bin\ExSMIME.dll”; $regsvr…

-

CRM Router and Dynamics CRM V9 Online–No Emails Being Processed

This one is an interesting one – and it was only resolved by a call to Microsoft Support, who do not document that this setting is required. The scenario is that you upgrade your CRM Router to v9 (as this is required before you upgrade Dynamics to V9) and you enable TLS 1.2 on the…

-

Exchange Server Dependency on Visual C++ Failing Detection

At the time of writing (Feb 2019), Exchange Server rollup updates and cumulative updates have a dependency on Visual C++ 2012. The link in the error message you get points you to the VC++ 2013 Redistributable though, and there are later versions of this as well. I found that by installing all versions…

-

Read Only And Attachment Download Restrictions in Exchange Online

Microsoft have released a tiny update to Exchange Online that has massive implications. I say tiny in that it takes about 30 seconds to implement this (OK, maybe 60 seconds then). When this is enabled, and below I will describe a simple configuration for this, your users when using Outlook Web Access on a computer…