[Updated 10th Nov 2015 with tips on managing bad items in PST files]

It's been in private preview for a while, and recently entered a free preview that any Office 365 subscriber can try. So I gave it a go, and have the following tips and guidance.

Preparing to upload PST files

You can upload PST files in situ from their current location on the network; there is no requirement to first copy them to a new folder for uploading. Doing this takes a few considerations, one of which is running the AzCopy process with an account that can access all the content.

AzCopy is the command line tool used to copy your PST files to Azure in advance of importing them into Office 365 mailboxes. You do not need an Azure subscription to do this, and until September 2015 this is a free service. To do this in situ upload of PST files without first copying them to a local network staging location you should include the --include-pattern switch in AzCopy. This is documented in the AzCopy help but not currently in the PST Ingestion help on TechNet (https://technet.microsoft.com/library/ms.o365.cc.IngestionHelp.aspx?v=15.1.166.0&l=1). Using AzCopy without --include-pattern will upload everything in the source path. As this is a PST ingestion process, you only want *.pst as the --include-pattern value. When this ingestion process starts to include uploads for SharePoint, a *.pst pattern will of course not be as useful a value to include.

In the following example, AzCopy is reading from a folder called “C:\Shares\Users”, looking in all subdirectories (--recursive) and only uploading *.pst files (“*.pst” in the --include-pattern switch).

azcopy copy "C:\Shares\Users" "https://uniqueurl.blob.core.windows.net/ingestiondata/20150101?sv=?..." --recursive --include-pattern="*.pst"

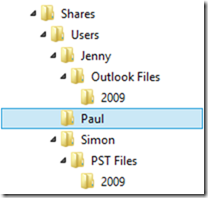

The data is uploaded to a folder called ingestiondata/20150101 in your Azure storage blob for the PST Ingestion process. Each file is uploaded to a subfolder of this folder that matches the folder it is located in on the source. For example, if the following folder structure existed:

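C:\Shares\Users\Jenny\Outlook Files\2009\jenny2009.pst

C:\Shares\Users\Paul\archive.pst

C:\Shares\Users\Simon\PST Files\2009\SimonArchive.pst

C:\Shares\Users\Simon\Archive2011.pst

Then in Azure storage the structure would be like the following: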

ingestiondata/20150101/Jenny/Outlook Files/2009/jenny2009.pst

ingestiondata/20150101/Paul/archive.pst

ingestiondata/20150101/Simon/PST Files/2009/SimonArchive.pst

ingestiondata/20150101/Simon/Archive2011.pst

Notice that the folder structure underneath the source path is duplicated to Azure and, for a real world scenario, notice that Simon has two PST files in different folders. The --include-pattern switch of AzCopy will find both, even though they may not be where you expect them to be. The 20150101 value is just a unique value that I have used (it's a date) and that I would change for different uploads, meaning that different uploads would never clash with an existing upload. TechNet suggests a name that represents the file share that you set as the source value, so that two uploads from two sources cannot overwrite each other. So in my example I might do an upload on a different day and use a different date value, or I could use CUserShares to represent the local upload and FileServerHome to represent \\fileserver\home. If I used FileServer/Home (changing \ for /) then I am creating additional subdirectories in Azure storage, and this needs to be taken into account.
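The mapping from a local PST path to its location in Azure storage can be sketched in a few lines of Python. This is only an illustration of the rule described above, not part of AzCopy or the ingestion service: the function name `blob_path` and its parameters are hypothetical, and it assumes the upload keeps the folder structure below the source path exactly as described.

```python
from pathlib import PureWindowsPath


def blob_path(source_root: str, pst_file: str, dest_prefix: str) -> str:
    """Return the path below 'ingestiondata/' that a local PST would be uploaded to.

    source_root: the folder given to AzCopy as the source (assumed Windows path)
    pst_file:    full local path of one PST file below source_root
    dest_prefix: the unique value placed after 'ingestiondata/' in the destination URL
    """
    # Drop the source path; the case of the remaining folders and file is kept as-is.
    relative = PureWindowsPath(pst_file).relative_to(PureWindowsPath(source_root))
    # Azure storage uses / rather than \ between folders.
    return "/".join([dest_prefix.strip("/"), *relative.parts])


print(blob_path(r"C:\Shares\Users",
                r"C:\Shares\Users\Simon\PST Files\2009\SimonArchive.pst",
                "20150101"))
```

Because the case of the local folders and filename is carried through unchanged, the result can be compared directly with what was actually uploaded.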

Preparing the PST Mapping File

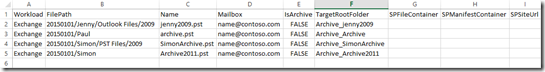

Once the upload is complete, and note that this is best done overnight as it maximises bandwidth use, you have 30 days to import the files from Azure into your mailboxes. To do this you need to create a CSV file like the following:

Workload,FilePath,Name,Mailbox,IsArchive,TargetRootFolder,ContentCodePage,SPFileContainer,SPManifestContainer,SPSiteUrl

Exchange,20150101/Jenny/Outlook Files/2009,jenny2009.pst,jenny@contoso.com,FALSE,Archive_jenny2009,,,,

Exchange,20150101/Paul,archive.pst,paul@contoso.com,FALSE,Archive_Archive,,,,

Exchange,20150101/Simon/PST Files/2009,SimonArchive.pst,simon@contoso.com,FALSE,Archive_SimonArchive,,,,

Exchange,20150101/Simon,Archive2011.pst,simon@contoso.com,FALSE,Archive_Archive2011,,,,

In Excel, it would look as follows:

This has a few important elements in it. Mainly, the Name value (for the PST filename) is case sensitive, which is not documented in TechNet at this time. I guess the FilePath is as well, but I did not come across that issue as I set all the case to the same as the source. The Name matches the PST filename, and the FilePath matches the value after “ingestiondata” in the URL, including the path the file was uploaded from. Therefore in my example for Jenny above, where the PST file was called “jenny2009.pst”, the path on the local file server was “C:\Shares\Users\Jenny\Outlook Files\2009\”, the source was “C:\Shares\Users” and 20150101 was used as the value following “ingestiondata” in the destination URL, the FilePath in the CSV becomes “20150101/Jenny/Outlook Files/2009”. That is, the FilePath in the CSV is made up of the destination value (after ingestiondata) followed by the local folder path, with \ changed to / and the source path removed.
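As a rough sketch of how the rows of this mapping file could be generated rather than typed by hand, the following Python builds one CSV row per PST from its local path. The helper names `mapping_row` and `mapping_csv` are hypothetical, and the TargetRootFolder convention used here ("Archive_" plus the filename without extension) is just an assumption based on the example rows above; which mailbox owns which PST still has to be worked out separately.

```python
import csv
import io
from pathlib import PureWindowsPath

HEADER = ["Workload", "FilePath", "Name", "Mailbox", "IsArchive",
          "TargetRootFolder", "ContentCodePage", "SPFileContainer",
          "SPManifestContainer", "SPSiteUrl"]


def mapping_row(source_root, pst_file, dest_prefix, mailbox):
    """Build one mapping-file row for a PST uploaded from below source_root."""
    relative = PureWindowsPath(pst_file).relative_to(PureWindowsPath(source_root))
    # FilePath = value after 'ingestiondata/' + local folders, with \ changed to /.
    file_path = "/".join([dest_prefix.strip("/"), *relative.parts[:-1]])
    # Assumed naming convention for the target folder in the mailbox.
    target = "Archive_" + relative.stem
    # Name keeps the exact case of the local filename, as it is case sensitive.
    return ["Exchange", file_path, relative.name, mailbox, "FALSE", target,
            "", "", "", ""]


def mapping_csv(rows):
    """Return the header plus rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(HEADER)
    writer.writerows(rows)
    return buffer.getvalue()


rows = [mapping_row(r"C:\Shares\Users",
                    r"C:\Shares\Users\Jenny\Outlook Files\2009\jenny2009.pst",
                    "20150101", "jenny@contoso.com")]
print(mapping_csv(rows))
```

Deriving FilePath and Name from the same local path that was uploaded avoids retyping them, which is where case differences tend to creep in.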

A second example, if I used the following AzCopy cmdline:

azcopy copy "\\fileserver\home" "https://uniqueurl.blob.core.windows.net/ingestiondata/FileServer/home/?..." --recursive --include-pattern="*.pst"

Then I would have FilePath values in the CSV that looked like “FileServer/home/Jenny/Outlook Files/2009” (case sensitive).

Once you upload the mapping file, the PST import from Azure to the Exchange mailbox (or Archive) starts. If the PST file cannot be found then you get an error in the management console quite shortly after starting. The error reads as follows:

Could not find source file {0}. Please correct the FilePath column in the mapping file and create a new job with the updated mapping file

Full file path

fileserver/home/Jenny/outlook files/2009/Jenny2009.pst

In the above error I have purposely set the FilePath and PST filename to the wrong case, as that is the cause of this error (unless you did not upload the PST or the path is completely wrong). The best source of the FilePath name is the AzCopy log file (set in the /V switch for AzCopy). This will not show the string value used after “ingestiondata” in the destination URL, which you need to add yourself, but it will show the full path the file was uploaded to and the correct case for this path and file.
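A case problem like this can be caught before the mapping file is uploaded. The following Python is a minimal sketch, assuming you already have a list of the paths that were uploaded (for example, copied out of the AzCopy log with the value after “ingestiondata” added in front). The function name `check_mapping` is hypothetical and nothing here talks to Azure or Office 365.

```python
def check_mapping(entries, uploaded):
    """Compare mapping-file entries against the uploaded paths, case sensitively.

    entries:  iterable of (FilePath, Name) pairs from the mapping file
    uploaded: iterable of full paths below 'ingestiondata/' that were uploaded
    Returns a list of (path, reason) tuples for entries that would not be found.
    """
    uploaded = list(uploaded)
    exact = set(uploaded)
    # Lower-cased lookup so a wrong-case entry can be matched to the real path.
    by_lower = {path.lower(): path for path in uploaded}
    problems = []
    for file_path, name in entries:
        wanted = f"{file_path.strip('/')}/{name}"
        if wanted in exact:
            continue
        actual = by_lower.get(wanted.lower())
        if actual is not None:
            problems.append((wanted, f"case mismatch, uploaded as {actual}"))
        else:
            problems.append((wanted, "not uploaded"))
    return problems


uploaded = ["FileServer/home/Jenny/Outlook Files/2009/jenny2009.pst"]
entries = [("fileserver/home/Jenny/outlook files/2009", "Jenny2009.pst")]
for path, reason in check_mapping(entries, uploaded):
    print(path, "->", reason)
```

An entry that differs only by case is reported together with the path as it was actually uploaded, so the FilePath and Name columns can be corrected before creating the import job.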

All the best with removing PSTs from the network! Of course there is more to do than just what is mentioned here: you need to find them, work out who the PSTs belong to and create this mapping file accurately. There are a number of PST ingestion software companies who will do this for you. You also need to ensure that the PSTs do not contain bad items and to control the import settings for the PST import process.

To ensure there are no bad items in the PST files (or try to at least) it is recommended that you scan the PST files with SCANPST.EXE (http://support.microsoft.com/en-us/kb/272227). This tool needs to be run on all PST files that you have located before you upload them or, if bandwidth is not an issue, you can upload them, import them and then process only those that fail.

Once SCANPST.EXE is complete, upload the new PST file and import it again (a new mapping file is probably needed). Then also tell the PST Ingestion service to continue processing items even if it finds bad items. To do this you need to configure a custom BadItemLimit once the import starts, as the current BadItemLimit default is 0, which means the import fails at the first bad item. You will get “TooManyBadItemsPermanentException” errors in the import log file if you need to do this. To set the BadItemLimit use either of the following:

- Connect to Exchange Online via remote PowerShell

- Get-MailboxImportRequest | FL name, mailbox, status, whencreated, requestguid

- This returns a list of import requests. Look for the most recent and get the requestguid value.

- Set-MailboxImportRequest -Identity "request-guid-found-above" -BadItemLimit unlimited -AcceptLargeDataLoss

Or you can just set the BadItemLimit the same for all imports without looking for the latest one

$all_import_requests = Get-Mailboximportrequest

Foreach ($import_request in $all_import_requests)

{

Set-MailboxImportRequest -Identity $import_request.RequestGuid -BadItemLimit unlimited -AcceptLargeDataLoss

}
